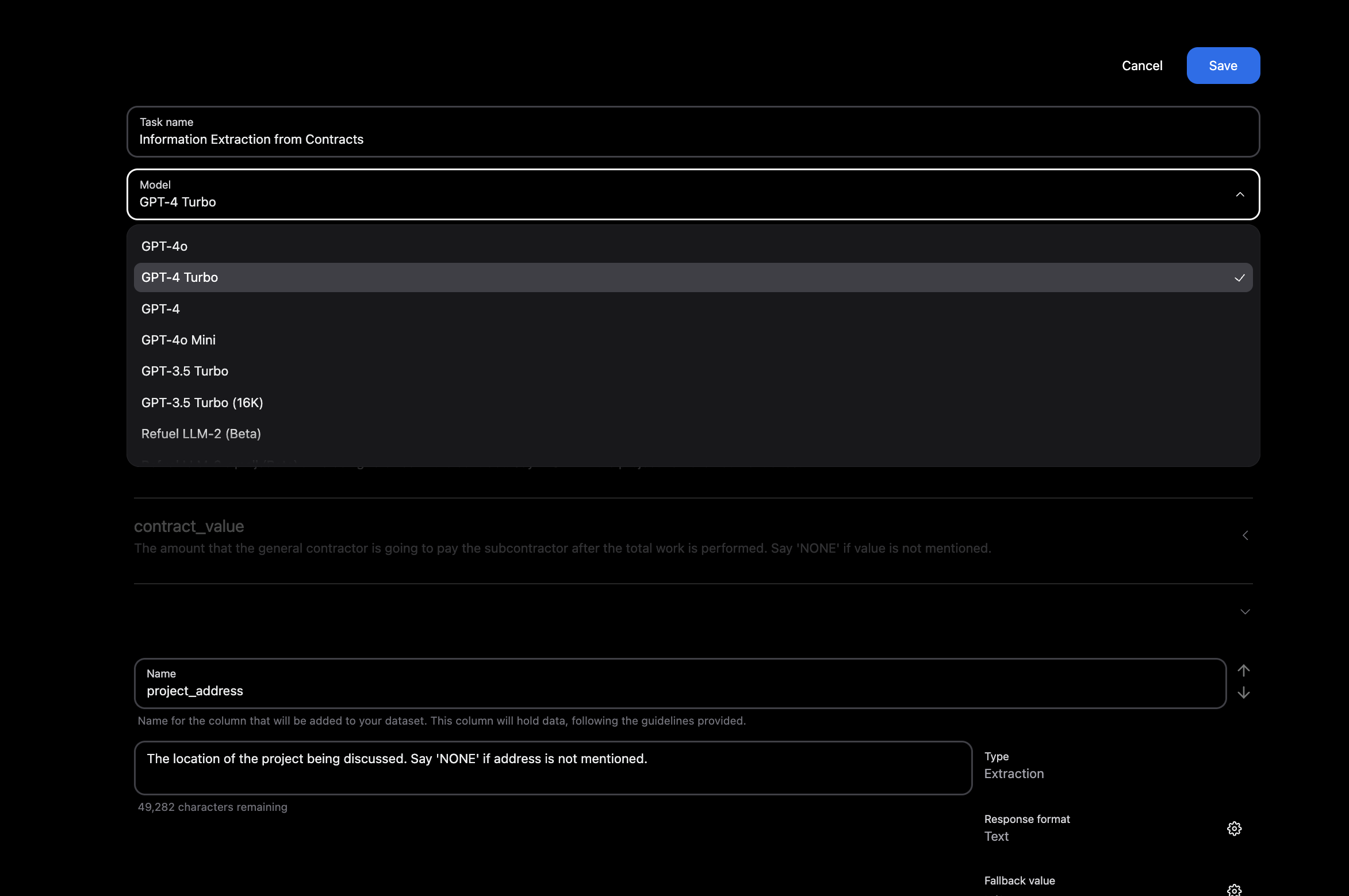

Step 1: Label the dataset using a larger model

Once the task and dataset you want to distill are set up in Refuel, select the larger model that will label the dataset. Its labels become the training data for distillation. Click “Run Task” to start labeling and generate the training dataset.

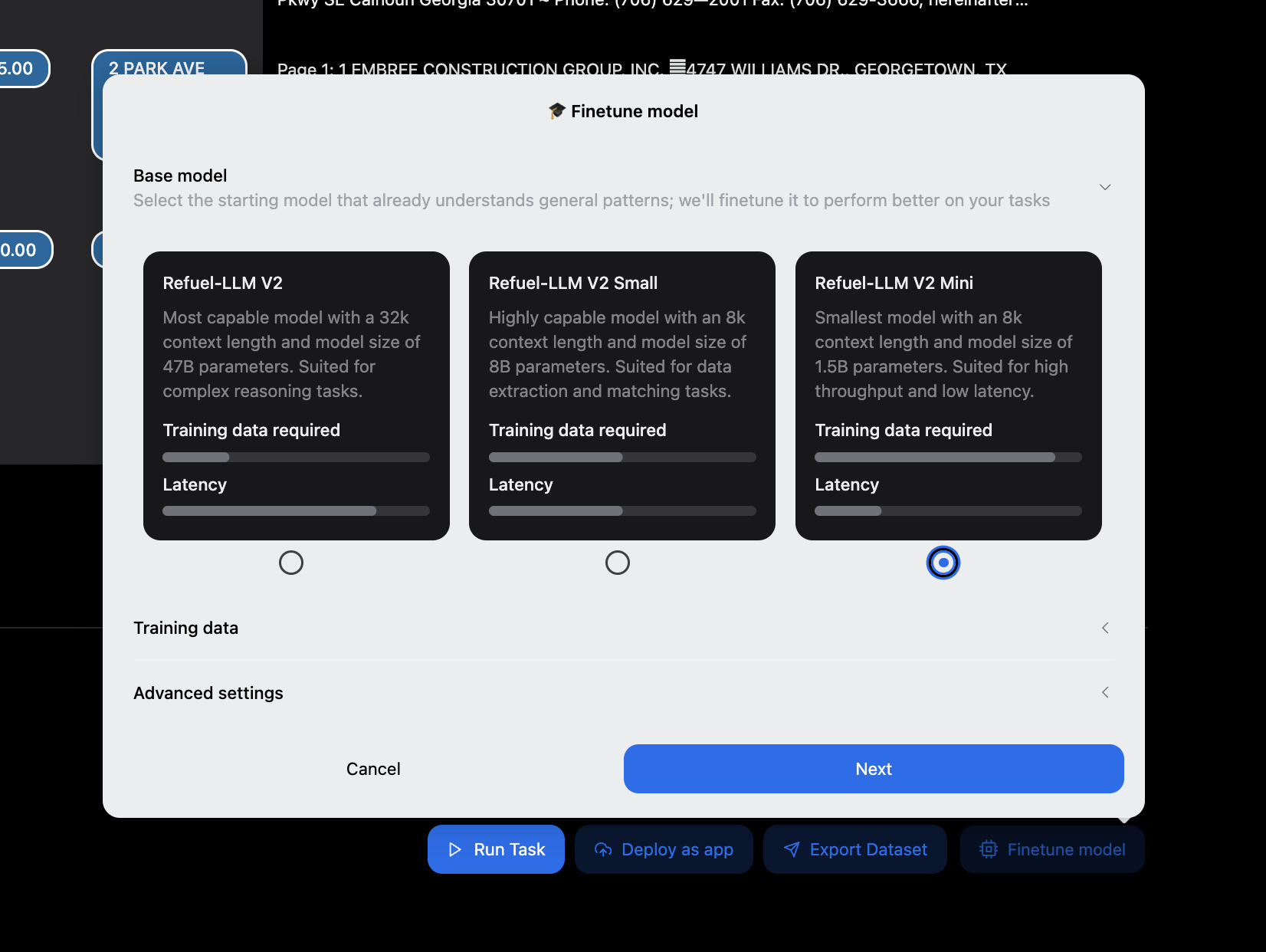

Step 2: Select the base model to distill into

Click “Finetune Model” and select the base model to distill into. We recommend “Refuel LLM V2 Mini” as the base model for distillation.

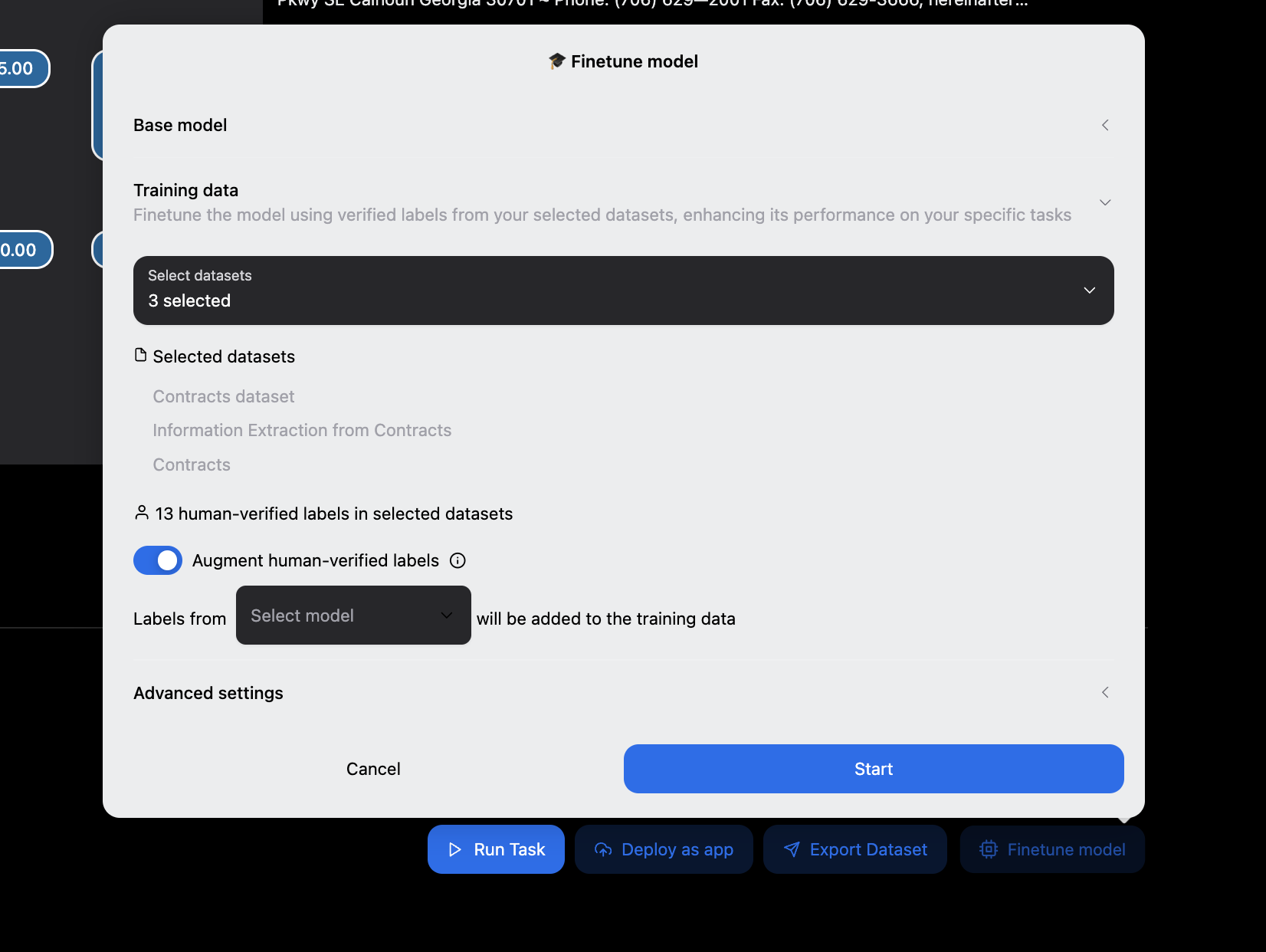

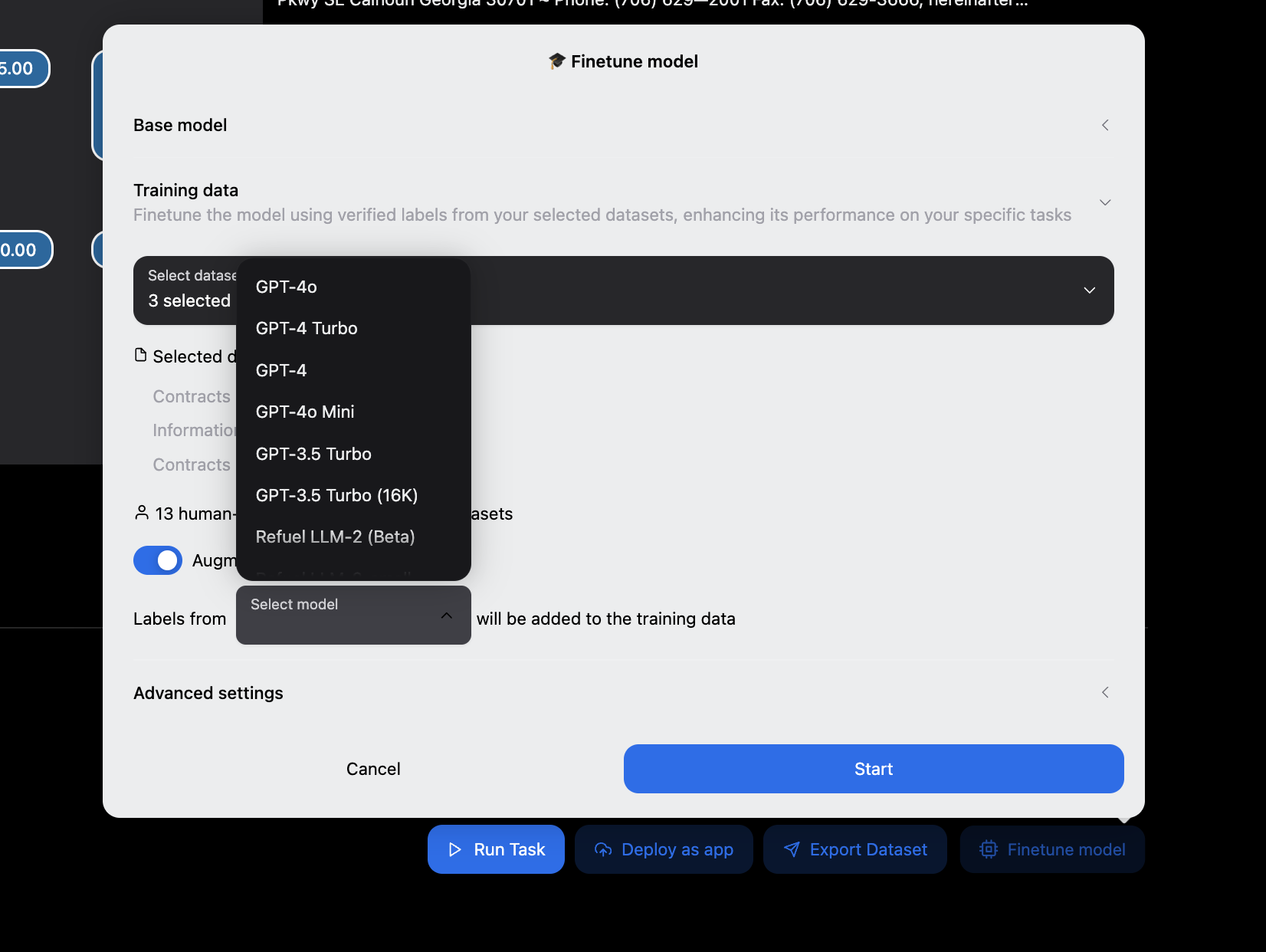

Step 3: Enable data augmentation and start distillation

Once you have selected the base model, enable “Augment human-verified labels” and choose the same larger model you used to run the task in Step 1 as the model to distill knowledge from. Click “Start” to begin the distillation process.
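To make the idea behind augmentation concrete, here is a minimal Python sketch of how a distillation training set might be assembled from the two label sources. It is an illustration only, not Refuel's API or internal implementation: `Row`, `build_training_set`, and the field names are hypothetical, and it assumes that a human-verified label takes precedence over the larger model's label whenever both exist.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Row:
    """One dataset row (hypothetical structure, for illustration only)."""
    text: str
    teacher_label: str                 # label produced by the larger model
    human_label: Optional[str] = None  # set only when a reviewer verified the row


def build_training_set(rows: List[Row]) -> List[Tuple[str, str]]:
    """Return (text, label) pairs for training the smaller model.

    Assumption: human-verified labels win; rows without one fall back
    to the larger model's label.
    """
    pairs = []
    for row in rows:
        label = row.human_label if row.human_label is not None else row.teacher_label
        pairs.append((row.text, label))
    return pairs


rows = [
    Row("Refund not received", teacher_label="billing", human_label="refunds"),
    Row("App crashes on login", teacher_label="bug"),
]
training_set = build_training_set(rows)
```

In this sketch the first row keeps its human-verified label and the second falls back to the larger model's label, so every row contributes a training example.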